2020

December

2020-12-31

Linear types in GHC

GHC 9.0 is out, bringing the highly anticipated new LinearTypes extension.

Linear types fall within a category of type systems known as substructural type systems. Such type systems allow for some interesting constraints; for example:

Given two functions that operate on a value, you can enforce at the type level that both functions are called in a specific order.

Given two functions that operate on a value, you can enforce that exactly one of function A and function B is called (an XOR): one must be called, but not both.

Substructural type systems have recently gotten increased attention due to being the foundation of Rust's borrow checker. Specifically, Rust's borrow checker relies on affine types, while Haskell now supports linear types. (More details on these below.)

This GHC proposal provides context on the decision to use linear types and discusses alternative type systems. Specifically, it draws comparisons to Rust's affine-typed borrow checker. I'd recommend reading the page. In brief, affine types are "weaker" than linear types (they provide fewer guarantees), and Rust makes up for those weaker constraints by (1) disallowing exceptions, and (2) implementing additional checks like lifetime analysis.

For a more complete understanding, we can take a step back to examine the category of type systems that linear types fall under. These type systems are known as substructural type systems, so named because each of them omits one or more of the usual structural rules (weakening, contraction, exchange).

Language type systems are generally concerned with the "kind" or "shape" of values. Substructural type systems extend this concept to validate not just values but usage, so constraints like "the argument of this function must be used exactly once" can be enforced. As a result, the type system can be leveraged to track resource usage (e.g. memory), which can make things like concurrency easier to implement.

Pretty much all languages hold some opinion on tracking resource usage:

C gives the programmer tools like `malloc()` and `free()`; they're then on their own

C++ adds idioms like RAII, which gives more consistent behavior

Java, Ruby, Python, JavaScript, Haskell, etc. use a garbage collector

Rust uses its famed borrow checker, which relies on a mixture of language semantics and substructural type systems

And now, Haskell is giving the option for more fine-grained resource management at the type level with linear types

Linear vs. affine types

Affine type systems are another class of substructural type systems worth examining. Rust's type system is affine, and its borrow checker is predicated on the guarantees provided by the affine type system.

Linear and affine type systems are subtly different:

Linear types: if the result is consumed exactly once, the argument is consumed exactly once.

Affine types: if the result is consumed exactly once, the argument is consumed at most once.

Linear types differ from affine types in that linear types enforce consumption, while affine types merely allow it. This means affine types can enforce that operation 2 cannot be called before operation 1, but they cannot enforce that operation 2 must be called. Linear types can enforce that operation 2 must be called after operation 1. In practice, this gives linear types stronger guarantees at the cost of greater constraints.

This is somewhat abstract, so here it is in plain English. In both linear and affine type systems, a variable effectively goes out of scope once it has been consumed. For example, suppose a linear function takes an argument x and either (1) passes x to another function, or (2) does some operation on x and assigns the result to another variable. In either case, x can no longer be referenced for the remainder of the function body. This will sound familiar to anyone who's fought with Rust's borrow checker before (though linear functions have the additional constraint that "borrowed" values must be used).

References

GHC linear types proposal - contains discussion on alternate substructural types

On linear types and exceptions - gives a great overview on the complications caused by exceptions, and the differences between linear and affine types

Learn just enough about linear types - a great "shallow dive" (as implied by the author) into linear types

Wikipedia article on structural rules - explains weakening ("use less than once") and contraction ("use more than once"). Both linear and affine types disallow contraction. Affine types allow weakening while linear types do not.

2020-12-25

What I want for my next job

Some characteristics I'd be interested in for my next job, based on my current gaps as an engineer:

NOT purely frontend. This is a prerequisite. I've found that the amount of novel ideas I encounter when working on frontend is very low.

Large company (tens of thousands of employees, with a wide band of seniority). All of the companies I've worked at have been very small (or at least have felt that way). My first company was on the larger side, but the applications team was very siloed and felt like a startup.

Heavily service-oriented architecture. I understand so little about practical implementations of microservices. Service discovery, meshes, cross-process communication...

Ideally multiple frontend applications and libraries, with strong emphasis on design systems.

Incremental improvement of large, pre-existing systems. Nearly all the projects I've ever worked on have been greenfield.

Engineering management. I've dipped my toes into this and hated it, but I can't keep running from this forever. Charity Majors talks about the engineer/manager pendulum, which is a concept I agree with. I've found that the best ICs have management experience, and vice versa.

Things I've learned from my current work experience:

Building applications and systems from scratch.

Ownership of product; a product mindset.

Rapid iteration and pivoting; cutting through uncertainty.

Sole responsibility for ownership and delivery of features.

2020-12-22

For certain high-potential companies, IPOs are being delayed in favor of more mega-rounds

While a mega-round obviously doesn't raise as much as an IPO, it gives a longer lifeline with which to increase the value of the company (and the resultant IPO)

This has led to a larger number of eye-popping IPO valuations (and a longer timeline from founding to IPO)

However, the gains are shifted from the open market (IPO) to VCs and other privileged investors; this also benefits people with better-than-common shares that don't suffer from the dilution caused by these additional rounds

The recent IPO pops of Airbnb and DoorDash show that despite this unprecedented level of greed, money is still being left on the table; given that we've been seeing more and more mega-rounds, this trend is likely to continue

2020-12-21

I've started trying to take up longform blogging again. Maybe I'll beat my previous score of one article a year.

Recently, I've been very into Cedric Chin's blog Commonplace. I don't always agree with his takes, but his framework for reasoning and his excellent prose are both things I admire and am trying to steal for myself.

November

2020-11-22

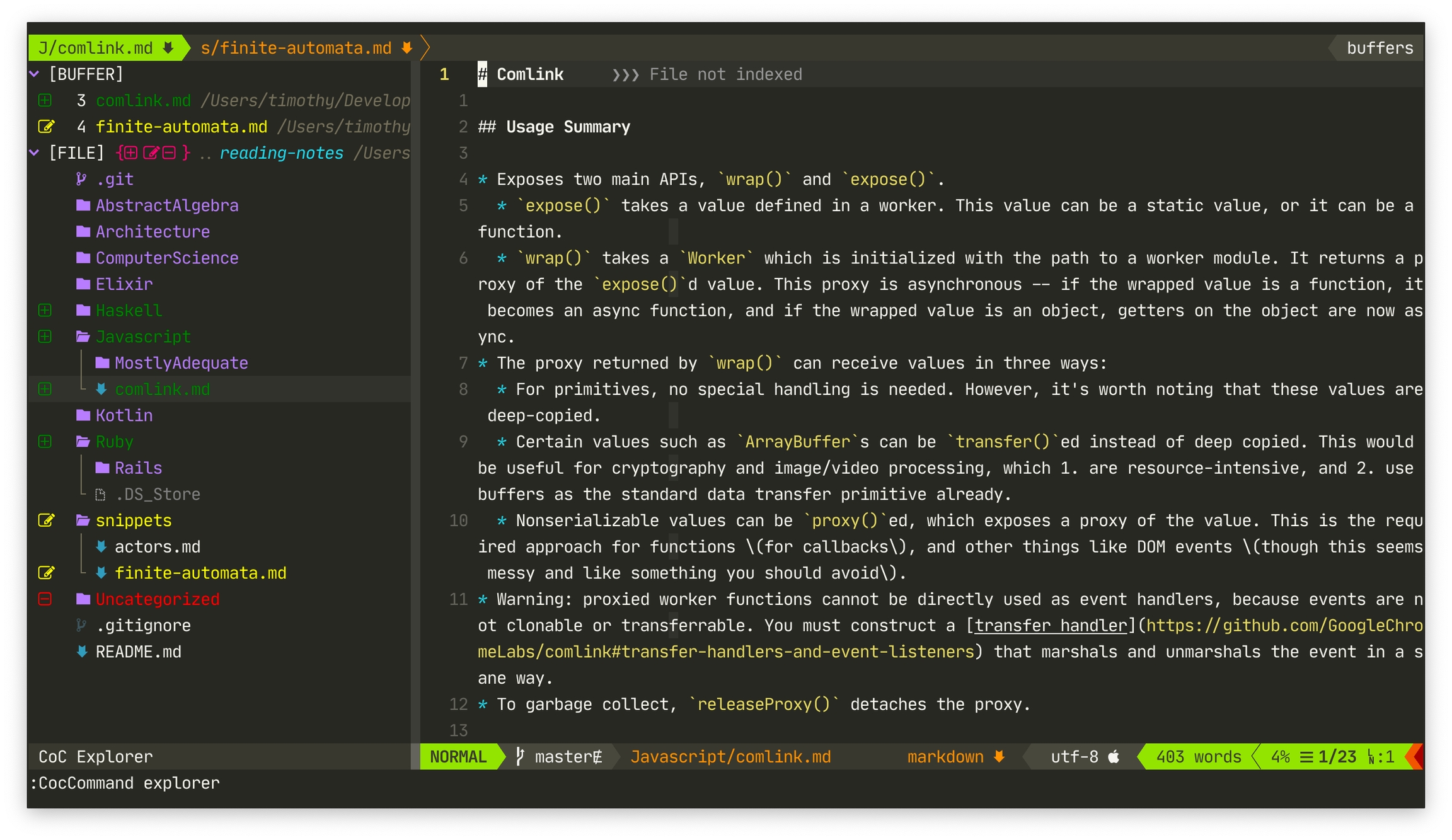

coc-explorer

NERDTree is fairly maligned in the Vim community, but I hadn't found anything approaching the ease of use and customizability... until now. Not only is coc-explorer easier to use, it's faster and more beautiful.

I may be overusing Nerd Fonts...

2020-11-21

Tariffs

The simplest explanation for the tradeoff provided by tariffs is that overall economic productivity is worsened in return for the protection of a specific industry. Alexander Hamilton first theorized that the temporary pain of tariffs and the increased costs of unoptimized production were worth bearing in order to become economically (and hence politically) stable. In other words, it is an interventionary rebalancing that accepts a smaller pie in order to make each slice of the pie more equitable... at least in theory. (Hamilton's report influenced the Whigs, which influenced Lincoln, who then made the principles laid forth by Hamilton part of the bedrock of Republican ideology, and eventually, that of the American School of economics.)

Like other fledgling nations, China's economy was initially closed and heavily protected by tariffs. Between 1993 and 2005, China pressed ahead with the economic liberalization Deng Xiaoping had spearheaded. One large watershed was China's entrance into the WTO, which was contingent upon opening its economy. During this period, China eclipsed Japan as the largest economy in Asia. Starting from 2005, however, Hu Jintao moved to halt this liberalization. The new administration engaged in aggressive protectionism with the goal of developing "national champions" that could compete with large multinationals. During this time, China also enacted a contractionary monetary policy, leading to a weaker yuan (and, eventually, the Trump administration's claims of currency manipulation). Xi Jinping has continued this trend to the present day.

In 2015, the Obama administration started subsidizing solar energy production. This led to an increased demand for solar panels, a large portion of which were sourced from China, increasing the trade deficit. The tradeoff was made that the US would weaken itself economically (simplistically speaking) in order to avert the impending climate disaster. This was also couched in the decade-long belief (or, more realistically, dream) that as China grew economically, it would reverse course and swing the needle back towards Deng Xiaoping's liberalization.

The Trump administration singled out these solar panels as an easy target for its first round of tariffs, announced in January 2018, about a year after inauguration. Unlike its predecessor, this administration didn't rank mass death from global warming high on its list of priorities (not to discount the insane power of the oil lobbying industry, of course). The tariffs then extended to steel, an American industry that had been dying a slow death (and, coincidentally, reliably voted red). Lastly, the Trump administration turned its eye towards technology and intellectual property.

The Trump administration believed that China would never play fair, and that extending the olive branch with each new administration was the wrong move. In fact, this opinion had been covertly held by both political parties: Chuck Schumer praised Trump's aggression towards China, and none of the 2020 Democratic primary candidates committed to reversing Trump's tariffs.

China's retaliation was harshest on America's farmers (with soybeans notable as the most valuable farm export). Being ardent ever-Trumpers, they buckled down to weather the storm, with tax and tariff revenue acting as a lifeline (as well as an increased ability to legally pollute). A year in, cracks were starting to show, with China reporting its slowest quarterly growth in almost 3 decades. However, the trade deficit hit new all-time highs in 2018, and the RMB hit its lowest value against the dollar since 2008, weakening past the historical warning point of 7 CNY per USD.

In January 2020, the "first phase" trade deal was in the works... right in time for COVID to strike. Amid the pandemic, China was suddenly the US's top trading partner again. Despite this, the trade targets were now near-impossible to hit. Several negotiations were in the works, but the Trump administration was too occupied with setting a new record for "biggest landslide election loss." This brings us to the present day. Trump is a lame duck likely to face criminal investigation once he leaves office.

As 2020 is the year of hindsight, what was the fallout? Economic growth slowed worldwide in a one-two punch with COVID. Many American industries like lumber slashed employment. Farmers suffered, with many ailing farms being swept aside by massive mega-farms. Many other countries like Canada eagerly stepped in to supply China with farm exports, and others like Vietnam vied for the newly vacant position of the world's factory. China, the paper tiger eager to hide cracks in its armor, cracked down on Hong Kong. Despite growth in protected industries, American manufacturing as a whole lost jobs, and the fabled factory reshoring did not occur. Given that the tariffs hurt Trump's strongest supporters, did this factor into his losing the election?

An incredibly selfish perspective: I don't like Trump. I don't like the Trump supporters hurt by the tariffs. I don't like China. I am not significantly impacted by the slowing of the American economy. Watching them all battle it out in a war of mutually assured destruction has been very, very satisfying for me.

One last aside: I recently learned that James P. Hoffa, the current head of the Teamsters, is the son of Jimmy Hoffa. Yeah, that guy.

2020-11-17

Stateless password manager

I was playing around with the idea of a stateless password manager using key derivation.

Traditional password managers generate unique passwords for services and then store them. This password manager skips that step by using key derivation to deterministically generate a service password from the master password and a unique service identifier (e.g. the name or URL). This allows for a portable, stateless password manager: with just the master password and the service name, the service password can be retrieved.
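The sketch below shows the derivation step in TypeScript. It is a minimal sketch, assuming Node's built-in `node:crypto` module with scrypt as the key-derivation function. Several details are illustrative assumptions rather than part of any standard scheme: the alphabet, the default length, the lowercasing of the service name, and the `rotation` counter. `derivePassword` is a hypothetical name.

```typescript
import { scryptSync } from "node:crypto";

// Output alphabet for generated passwords (an assumption; adjust to a site's rules).
const ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789!@#$%^&*";

// Deterministically derive a service password from the master password and a
// service identifier. Nothing is stored: the same inputs always give the same output.
function derivePassword(
  masterPassword: string,
  service: string,
  rotation = 0, // hypothetical counter, bumped when a service password must change
  length = 20,
): string {
  // The normalized service name plus the rotation counter act as the salt,
  // so every (service, rotation) pair gets an independent key.
  const salt = `${service.trim().toLowerCase()}#${rotation}`;
  // scrypt is deliberately slow and memory-hard, which makes guessing the
  // master password from a leaked service password expensive.
  const bytes = scryptSync(masterPassword, salt, length);
  // Map each derived byte onto the alphabet. The modulo adds a small bias
  // toward the first characters, which is acceptable for a sketch.
  return Array.from(bytes, (b) => ALPHABET[b % ALPHABET.length]).join("");
}

const pw = derivePassword("correct horse battery staple", "github.com");
console.log(pw.length); // 20
```

Because every guess at the master password costs a full scrypt run, a leaked service password doesn't cheaply expose the master password. That protection disappears if the master password itself is weak.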

Here's the basic implementation:

Some thoughts I had in the process of writing and testing this:

Some sort of persisted list of service names is needed for usability. How can this be done in the most portable and secure way possible? An easy answer is an encrypted JSON file mapping each service name to a salt. The salt can simply be the password rotation count.

The master password represents an even more sensitive single point of failure than traditional password manager master passwords. Because there is no backing service or persistence, there is no way to implement multifactor authentication.

The master password must also have sufficient entropy.

A full list of downsides and background information is presented here. Overall, the approach is interesting, but the strategy has too many flaws.

October

2020-10-30

Software engineering entry points

It seems there are two high-level entry points for modern software engineers.

Bottom-up: the computer engineers. Learned (and/or worked) in environments where code wasn't separable from the underlying hardware. Tends to be older. Focused on kernel, driver, etc. development. More used to patterns that have direct analogues in hardware, such as locks, threads, and interrupts.

Top-down: the product engineers. Learned (and/or worked) in environments with a high level of abstraction. Tends to be younger, and more likely to be self-taught. Focused on application development. More used to high-level patterns.

This might seem like a pointless observation, but I thought it was interesting and worth writing down to mull over later.

September

2020-09-25

Timezone woes

Documenting this for myself so I stop footgunning myself with this once a year.

`.toISOString()` returns a timestamp in UTC for both `Date` and `moment`.

Aim to store all timestamps as UTC. This is obvious on the DB layer, but I think it should be done in the UI layer as well, simply to reduce the chance of fucking up. Consistency!

To display values in local time, wrap the timestamps in `new Date()` or `moment()`. This acts as a view-only transformation layer.

Dates should not be timestamps! (It took working with Rails dates for me to realize this...) Otherwise, users can see dates that are off by one for a few hours of the day, depending on the UTC offset of their timezone.
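Here is a sketch of these rules using only the built-in `Date`. It assumes instants are exchanged as ISO strings and calendar dates as plain `YYYY-MM-DD` strings. The helper names are hypothetical.

```typescript
// Store: serialize instants as UTC ISO strings, in the UI layer as well as the DB.
function toStoredTimestamp(d: Date): string {
  return d.toISOString(); // always UTC, e.g. "2020-09-25T17:30:00.000Z"
}

// Display: convert to the viewer's local time only at the view layer.
function displayLocal(stored: string): string {
  return new Date(stored).toLocaleString();
}

// Dates are not timestamps: keep calendar dates as "YYYY-MM-DD" strings.
function parseCalendarDate(s: string): { year: number; month: number; day: number } {
  const m = /^(\d{4})-(\d{2})-(\d{2})$/.exec(s);
  if (!m) throw new Error(`not a calendar date: ${s}`);
  return { year: Number(m[1]), month: Number(m[2]), day: Number(m[3]) };
}

// Build "today" from the local clock fields; slicing toISOString() would use
// the UTC day instead and can be off by one.
function localCalendarDate(d: Date): string {
  const pad = (n: number) => String(n).padStart(2, "0");
  return `${d.getFullYear()}-${pad(d.getMonth() + 1)}-${pad(d.getDate())}`;
}

// The pitfall: a date-only string is parsed as UTC midnight, so for a viewer
// west of UTC (e.g. America/New_York) pitfall.getDate() is 24, not 25.
const pitfall = new Date("2020-09-25");
console.log(pitfall.toISOString()); // 2020-09-25T00:00:00.000Z
console.log(parseCalendarDate("2020-09-25").day); // 25 in every timezone
```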

2020-09-19

Lots of good discussion in this thread, but this particular comment stood out to me as informative (though with a somewhat low signal-to-noise ratio). Reproduced below, minus the points I found opinion-based or just silly:

[Unchecked] exceptions make it difficult to handle nested errors (e.g. the `catch()` itself throws)

Unchecked exceptions (and implicit propagation) mean any function can throw something, at any time -- it's not defined in the API contract -- so if you really wanted to be safe and general, every function would need exception checking. This is the same problem that null has.

It also means it can change "under your feet" -- a library can add new exceptions to functions with no indication; this is also possible in Rust, but it requires much more finagling by the author.

Checked exceptions like in Java are basically the same as Rust's error-as-enum, but feature the other points.

At least in Java, not all exceptions are checked, but of course that doesn't need to be true.

Through developer ergonomics, exceptions lead to the natural strategy of "try it, and roll back on failure." Rust's `Result<>` leads to the natural strategy of "validate, then continue." The latter, IMO, is significantly easier/more general/more sane.

Through developer ergonomics (avoiding a try-catch around every statement), multiple statements are naturally rolled into a single try-catch -- undone when this turns out not to be the case. In the worst case, if you try-catch every statement in a function, it's completely unreadable. It's also difficult to know which function throws which error if you have them rolled up in a single try-catch with multiple catches.

Exception's main job is to put errors in the background; Rust has the philosophy of putting errors in the foreground

The main benefit they have over return codes is that return codes are utterly arbitrary, and even less defined in the API contract, or the language itself, than exceptions. They lose out on the other points -- namely that they're significantly more complex.

`Result<>` grants the natural ergonomics of return codes, with a higher level of definition than exceptions.

Try-and-rollback is a generally valid strategy for single-threaded code; less so for parallel code (e.g. fork-and-join). `Result<>` fits that model more cleanly.

Additional thoughts:

I'm a huge fan of return value based errors (just no error codes, please...), but I feel the friendliness of such interfaces is highly dependent on how well the language supports higher-kinded type-ish operations in general. There are lots of parallels between null handling and error handling; does this language have equivalent APIs for Maybes and Eithers, given that they are both sum-type based monads? Do I need to import a bunch of stuff to actually work meaningfully with these values, or is it baked into the language itself? How painful is mapping over values, or function composition? Can I flat map?

Rust's organization as a community continues to impress. The decision-making process feels much more open and collaborative than, say, TC39.
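TypeScript has no built-in `Result`, so here is a minimal hand-rolled sketch of the "validate, then continue" style and of the map / flat map question above. `Result`, `ok`, `err`, `map`, `flatMap`, `parseAge`, and `checkAdult` are hypothetical names for illustration, not any particular library's API.

```typescript
// A discriminated union standing in for Rust's Result<T, E>.
type Result<T, E> = { ok: true; value: T } | { ok: false; error: E };

const ok = <T>(value: T): Result<T, never> => ({ ok: true, value });
const err = <E>(error: E): Result<never, E> => ({ ok: false, error });

// Transform the success value; an error passes through untouched.
function map<T, U, E>(r: Result<T, E>, f: (t: T) => U): Result<U, E> {
  return r.ok ? ok(f(r.value)) : r;
}

// Chain a step that can itself fail (flat map, a.k.a. Rust's and_then).
function flatMap<T, U, E>(r: Result<T, E>, f: (t: T) => Result<U, E>): Result<U, E> {
  return r.ok ? f(r.value) : r;
}

// Hypothetical validation steps.
function parseAge(input: string): Result<number, string> {
  const n = Number(input);
  return Number.isInteger(n) ? ok(n) : err(`not an integer: ${input}`);
}

function checkAdult(age: number): Result<number, string> {
  return age >= 18 ? ok(age) : err(`too young: ${age}`);
}

// Each step validates before the next one runs, so nothing needs rolling back.
const message = map(flatMap(parseAge("42"), checkAdult), (age) => `age ${age} accepted`);
console.log(message); // { ok: true, value: 'age 42 accepted' }
```

How pleasant this style is in practice depends on how much of this plumbing the language or its standard library provides. Here every helper had to be written by hand.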

2020-09-05

I'm perpetually fascinated by Singapore's transformation from a barren territory, fresh from the occupation of two separate global powers, to a hyper-modern society punching well above its weight on the international scale.

While most articles about Singapore focus on the improbability of Lee Kuan Yew's transformation of his nation, this article peels back some of the mythos behind the man. It reveals some of the costs paid (and brutalities committed) to force Singapore from the past into the future.

A seductive assumption underlies the euphoria around the Singapore model: that models of development can be scientific and universal. Those who are afflicted with this euphoria search eagerly for examples of universality. But there is far more to the Singaporean story than mere technocracy. Political strategy and a keen understanding of domestic and international power were central to the success of Lee’s PAP. This allowed him to create the institutional foundations for Singapore’s famous technocratic model. Likewise, there is far more to the rise of China than an imported Singaporean model—a story frequently told by stringing together study-mission statistics and a couple of Deng anecdotes.

That story ignores, for example, China’s decentralized system of de facto fiscal federalism and fierce xian level competition—which have no Singaporean equivalent—because it is inconvenient for their thesis. They sweep aside the fact that the father of Singapore himself and a legion of elite Singapore civil servants could not scale the model under optimal conditions. Some are so oblivious of Singaporean history that they do not even realize they are advocating for a developmental model that contradicts their own ideological views. This analytical trap ends up not understanding Singapore, or China, or arguably the Western development path itself.

Ironically, Lee Kuan Yew himself had no patience for other people’s models. In his words, “I am not following any prescription given to me by any theoretician on democracy or whatever. I work from first principles: what will get me there?” If there is a lesson from Singapore’s development it is this: forget grand ideologies and others’ models. There is no replacement for experimentation, independent thought, and ruthless pragmatism.

August

2020-08-10

Dotfiles

Recently, my work computer was bricked. I timed myself setting it up today, and it took me just over an hour to have my full dev environment set up. A significant amount of time was spent waiting for brew to install stuff, as well as on manual tasks like entering licenses for various desktop apps. Overall I'm super happy with where my setup is at the moment.

July

2020-07-29

React testing patterns evolve really fast, and sometimes I get the feeling that no one really knows what best practices are. This collection of articles beats the odds and very comprehensively covers basically everything you need to know. Highly recommended.

2020-07-24

Cube.js is a microservice that takes declarative, object-based parameters as input and generates SQL that is then used to query a database. It also handles some nice-to-haves like caching, query orchestration, and pre-aggregation.

Pros:

Can allow you to quickly churn out queries on many fields with a high level of dimensionality for filtering; also has support for highly customized filters

Can declaratively query things that are verbose or tedious to manually write, like rolling windows

Bypasses the web application layer so should have less overhead for certain things (e.g. no need to go through Rails routing logic)

Has built-in caching behavior with expiry periods at the entity level instead of at the query level

Supports pre-aggregations; it even handles maintaining the rollup tables

Can connect to a variety of backends

Multitenancy, multi-DB support

Other random really nice things, such as built-in approximate count distinct via HyperLogLog

Cons:

Produced SQL cannot be hand-tuned

Open questions:

It can handle joins, but I'm fairly suspicious about performance characteristics, and the docs aren't that informative here either

Seems like a good value proposition when we want to offer a kitchen-sink approach for dashboards, aren't THAT opinionated about performance, and don't have a lot of SQL expertise. It is extra beneficial if you have a lot of microservices / databases, since it can act as an analytics gateway.

2020-07-21

This was an incredible read. Reads almost like a screenplay.

Many of the German scientists were horrified at the idea of a uranium bomb, to the extent that they hoped Germany would lose the war. Many were also happy that it had been the US rather than Germany that dropped the bomb. Beyond the national guilt aspect, there was an opinion that if Germany had developed the bomb first, they would have leveled London and then immediately been destroyed in retaliation.

In fact, the scientists hoped that their repudiation of Hitler and the Nazi party would help them after the war, and welcomed the idea of working with their western colleagues (instead of Russia).

While the nuclear research programs of the various countries were all secrets, all the academics were familiar with their cohorts in their enemy countries, and had been for years before the war.

All the scientists were politically well informed. They also very accurately charted out how the Cold War would unfold (Western powers would use the bomb to pressure Russia until Russia had their own bomb, at which point war would break out again).

[Otto Hahn](https://en.wikipedia.org/wiki/Otto_Hahn) is "considered by many to be a model for scholarly excellence and personal integrity," which is evident from his recorded dialogue.

Academic drama and gossip was real in the 40s as well.

2020-07-17

With this, my primary reservation about Apollo Client has been solved. If only it came a year earlier when I was still using it. Incredible work from the team.

2020-07-16

Product vs. engineering

The younger a company, the more an engineer will be doing product work, and the less they will be doing infrastructure (pure engineering) work. It's a delicate balancing act, however, because infrastructure makes up the foundation of everything. Infrastructure work doesn't give immediate signals to investors and generally takes a long time to show returns. And the younger the company, the shorter your time-to-payoff needs to be (which is also the crux of my argument as to why startups generally shouldn't hire junior engineers). You simply don't have the luxury of nurturing long-term value. Thus, startup engineers are given the herculean task of building product while essentially building out high-quality infrastructure "as an aside."

One interesting effect of startup funding is that your runway is very spiky, and as a result, it can be accompanied by large shifts in strategy. Thus, a Series B (or later) can be the impetus to kickstart a dedicated engineering infrastructure team.

2020-07-08

Redaction

A common simple way to implement PII redaction is via the following algorithm:

For each model property flagged as redactable, destroy data.

If model has no relations, exit.

If model has relations, recurse.
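Here is the recursive algorithm above, sketched in TypeScript over an in-memory object graph. `RedactableModel`, `redactable`, `relations`, and `redact` are hypothetical names. A real implementation would issue DB updates rather than mutate objects. The cycle guard is an addition that isn't in the algorithm as stated.

```typescript
// Hypothetical model shape: plain fields, the names of PII fields, and related models.
interface RedactableModel {
  fields: Record<string, string | null>;
  redactable: string[];
  relations: RedactableModel[];
}

// Redact a model and everything reachable through its relations; returns the
// number of fields destroyed. `seen` guards against cycles (an addition to the
// algorithm as stated), e.g. a child that points back at its parent.
function redact(model: RedactableModel, seen = new Set<RedactableModel>()): number {
  if (seen.has(model)) return 0;
  seen.add(model);

  // Step 1: destroy every property flagged as redactable.
  let destroyed = 0;
  for (const key of model.redactable) {
    if (model.fields[key] != null) {
      model.fields[key] = null;
      destroyed++;
    }
  }

  // Steps 2 and 3: with no relations this loop does nothing (exit);
  // otherwise recurse into each related model.
  for (const child of model.relations) {
    destroyed += redact(child, seen);
  }
  return destroyed;
}

const address: RedactableModel = {
  fields: { street: "1 Main St", city: "Springfield" },
  redactable: ["street"],
  relations: [],
};
const user: RedactableModel = {
  fields: { name: "Ada", plan: "pro" },
  redactable: ["name"],
  relations: [address],
};
console.log(redact(user)); // 2
```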

I've been thinking about a strategy that involves storing a model-level encryption key on all redactable models, and encrypting all PII with it (on top of any other encryption that is done). The first step in the algorithm would then simply be to destroy the PII encryption key.
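Here is a minimal sketch of the per-model key idea (commonly called crypto-shredding), assuming Node's built-in `node:crypto` with AES-256-GCM. `EncryptedModel`, `encryptPii`, `decryptPii`, and `shred` are hypothetical names. In a real system the key would live in a separate key store, and "destroying" it means deleting it there, not just nulling a field.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// Hypothetical model: PII is only ever persisted as ciphertext under a per-model key.
interface EncryptedModel {
  piiKey: Buffer | null; // null once redacted
  pii: { iv: Buffer; tag: Buffer; data: Buffer };
}

function encryptPii(plaintext: string): EncryptedModel {
  const piiKey = randomBytes(32); // fresh 256-bit key per model
  const iv = randomBytes(12); // 96-bit nonce, the standard size for GCM
  const cipher = createCipheriv("aes-256-gcm", piiKey, iv);
  const data = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  return { piiKey, pii: { iv, tag: cipher.getAuthTag(), data } };
}

function decryptPii(model: EncryptedModel): string {
  if (!model.piiKey) throw new Error("PII has been redacted");
  const decipher = createDecipheriv("aes-256-gcm", model.piiKey, model.pii.iv);
  decipher.setAuthTag(model.pii.tag);
  return Buffer.concat([decipher.update(model.pii.data), decipher.final()]).toString("utf8");
}

// Redaction is a single write: drop the key and the ciphertext becomes unreadable.
// (In memory this only drops the reference; a key store must actually delete it.)
function shred(model: EncryptedModel): void {
  model.piiKey = null;
}

const record = encryptPii(JSON.stringify({ name: "Ada", ssn: "000-00-0000" }));
console.log(JSON.parse(decryptPii(record)).name); // Ada
shred(record); // decryptPii(record) now throws; the ciphertext can stay where it is
```

The write-time cost is visible here: every write pays for an encryption, while redaction becomes a single key deletion no matter how large the ciphertext is.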

If there is not a clear overarching parent model, or if you want more granular redaction, each model would need its own key. This means you can't just delete a single key, so the number of DB round trips is unchanged, and there is no benefit when it comes to atomicity.

One can imagine a scheme for nested models where each child model's key is derived from the parent's key, which would allow for truly atomic redactions AND redaction of specific nested models. However, because one of the goals of this scheme is simplicity, it's questionable whether this is worth doing. Cool idea, though.

There is now a single point of failure, which can be both a strength and a weakness. The strength is that only a single key needs to be checked for the redaction operation, making it harder to "forget" to redact some properties. The weakness is that any mistake becomes catastrophic. PII is interesting in that its damaging potential can be thought to scale exponentially with the amount of PII. A birthday or an SSN alone is not that bad, but once an attacker has both, they can do far more damage.

Due to the added encryption at write time, we are trading write performance for redaction (deletion) performance. So this strategy is more beneficial the more expensive deletes are (e.g., if you are storing massive blobs of PII for some reason).

Pros:

Faster redaction with the potential of being atomic.

Cons:

Loss of flexibility. You can't do partial redactions any more (redact specific fields). You can argue you can use the original simple deletion method, but now you have two redaction mechanisms which isn't ideal for an abstraction.

Slower writes.

Conclusion: this can be a neat way to redact atomically if you don't need granular redaction and have very large blobs, but is not a one-size-fits-all solution.

2020-07-06

Evaluating abstractions

I really enjoyed this Quora answer regarding leaky abstractions. Essentially, Guillermo is saying that there are two loose categories of leaky abstractions.

A leaky abstraction is incomplete if it does not fully handle logic and requires the consumer of the abstraction to handle special cases (and thus to concern itself with the abstraction's implementation). When faced with an incomplete abstraction, one can try to add additional handling to the abstraction, potentially at the cost of simplicity. Alternatively, the base assumptions of the abstraction may simply be invalid, in which case one can rewrite the abstraction to encompass the unhandled case.

A leaky abstraction is invalid if it simply models the world incorrectly. Perhaps assumptions were made that do not actually accurately represent business logic. Despite sunk costs, this abstraction should be torn out and rewritten.

2020-07-05

Thoughts on language affordances

These thoughts are incomplete, and while I write these like conclusions, they're more in-progress trains of thought.

Ruby

Pro: Extreme developer friendliness and the ability to create very declarative interfaces due to how dynamic the behavior is and how flexible duck typing is. Concepts as constraining as interfaces are explicitly rejected.

Con: Due to the hidden complexity of metaprogramming-based systems, reasoning about a Ruby-based system is difficult for both static analyzers and humans. Thus there is a heavy dependence on convention (resulting in heuristics about how things work) and on writing tests for everything. As a result, Ruby requires a higher level of discipline.

Haskell

Pro: Excellent type system that heavily encourages purity and total functions. Very high level of abstraction.

Con: While small systems are easy to encapsulate via types, real-world programs often present complexities that Haskell is not suited to address. Developers are given two main options: (1) relying on unsafe, partial functions, which discards much of the benefit of Haskell; or (2) descending down a rabbit hole of ever-increasing complexity to do things in a way acceptable to Haskell. Haskell also requires a higher level of discipline (but compared to Ruby, you literally can't write code that compiles without discipline). (The high complexity floor is actually somewhat similar to C++'s.)

Typescript

Pro: Type system designed around pragmatism and productivity. Types are more of a supporting cast member than the main character.

Con: While it's very easy to add typing to projects of pretty much any size, there is a line drawn in the sand regarding how powerful you can get with types. More complex generics, higher-kinded types, etc. are all difficult to implement and/or result in very poor developer experience. At the same time, the type system is lenient enough to let you footgun yourself, making types a burden rather than a benefit.

Other unstructured thoughts

On the scale of flexibility / pragmatism to rigor / purity:

Ruby -> Elixir -> OCaml -> Haskell

Specifically, OCaml discards many of Haskell's rules for practicality. Laziness is opt-in rather than the default behavior; typeclasses / functors etc. are handled via record packing/unpacking; syntax is explicitly designed for ease of reading; etc.

2020-07-04

Should make using variables in settings (e.g. for colors) much more sane!

June

2020-06-28

Cross-browser woes

Three frontend facts that wasted a lot of my time yesterday, posting here to hopefully save others some research:

According to the HTML spec, `<input>`s are not supposed to accept children. Seems reasonable, except that `:before` pseudo-elements etc. are also treated as children. Only Firefox follows the spec and correctly renders nothing. This is relevant if you want to build checkbox-powered sliders: pseudo-elements will work in all browsers except Firefox.

Most browsers focus buttons when they are clicked. However, this is also not in the spec, and Firefox and Safari will not focus the button on click… but only on OSX and iOS! Firefox on Android or Windows will focus. MDN documents this in the section 'Clicking and focus' (worth noting Safari lists this as a bug).

The above usually isn’t important (you rarely need to track button focus behavior), but there’s one case I ran into. Firefox resolves clicks as: dispatch click event => dispatch blur event on the previously focused element => resolve onclick() and dispatch focus event on the clicked element (unless it’s a button). Because event dispatches are async, React rerenders can happen between each of these. Combined with the previous Firefox quirk, any component where button focus is relevant (in my case, an input-with-button where the button is disabled unless either the input or the button is focused) will break on Firefox… on Apple OSes. (For some reason, Safari works fine; it probably resolves events in a slightly different order.)

2020-06-25

Syntax highlighting deep dive

Recently I updated my Vim setup to properly detect syntax for Ruby heredocs based on the delimiter. This led me to dig deeper into how context-based syntax highlighters are implemented in Vim.

Vim loads some configuration based on directory name. For example, whereas .vimrc is loaded on program start, config files in ftplugin/ are run on demand when Vim detects that a file of the given filetype (ft) has been opened. Similarly, files in after/ftplugin/ or after/plugin/ are loaded after the files in ftplugin/ and plugin/ have been sourced. The purpose of this is to allow tacking small modifications onto existing plugins.

I wanted to add highlighting to SQL heredocs in Ruby. To do this, I added after/ftplugin/ruby.vim:


May

2020-05-31

Great article from Chris Coyier outlining the new sub-fields of frontend. He breaks it down into design-focused frontend (the “traditional” frontend, focused on HTML/CSS), systems-focused frontend (focused on design systems and component architecture), and data-focused frontend (focused on access and manipulation of backend data, and the implementation of custom business logic).

I’d personally add “infrastructure-focused frontend” as another sub-field as well (focused on issues like bundle optimization, code splitting, server rendering, caching, delivery etc.)

2020-05-17

tl;dr:

--incremental and --noEmit are not compatible, so we emit to a temporary directory instead. This makes the initial build a little slower (about 20% for me) but makes all subsequent typechecks about 2-3x faster.

Also, it's halfway through May already. Time flies.

2020-05-09

Nice read about Facebook’s dev philosophies for their recent frontend rewrite. Despite the title, the main focus of this article is data / code loading patterns. Some things that stood out to me:

They invested a lot into lazy loading. They even expanded their GraphQL usage to be able to specify what parts of their JS bundle to load as part of a data query. I don’t think I’ve seen this level of data fetching + code coupling elsewhere.

Looks like Facebook dropped server-side rendering entirely (which also makes me curious about the future of React SSR, given how much React’s design decisions are influenced by dogfooding). Now, instead of requesting markup with data embedded (SSR), they do a regular JS load and concurrently open a socket to fetch the data the JS needs before the JS has even finished loading.

The conventional wisdom is to code split at the route level, but they instead code split at the container / presenter level, and then try to make the container as lightweight as possible, which avoids the issue of serial loading (route lazy loads -> container kicks off data fetch -> user needs to wait for 2 loads).

2020-05-01

Still digesting this, but this is prime language nerd material: https://www.unisonweb.org/docs/tour

Video intro: https://www.youtube.com/watch?v=gCWtkvDQ2ZI (one of the most interesting tech talks I've watched recently!)

The language has an incredibly novel "build" system and entirely rethinks how source code is handled. Instead of treating a project as "a collection of text files," a Unison project is a giant, well-typed tree. Basically, all code is stored as a syntax tree and is keyed by the hash of that syntax tree (like Lisp taken to its logical limit). You modify code by "checking out" code from the abstract codebase (which generates a human-readable optimized version of the function from the AST), editing it, and "recommitting" it into the codebase. The codebase itself is basically a Merkle tree where each function's dependencies are tracked only via the hashes of their ASTs. The dev experience seems similar to what some companies with huge codebases, like Google, do: you never have a complete, human-readable version of the codebase available, and you create / update transient local source files that are somehow merged into the codebase.
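To make the "keyed by the hash of the syntax tree" idea concrete, here is a toy sketch. Everything in it (the Expr type, FNV-1a as the hash, the Codebase record with a separate name table) is a hypothetical simplification for illustration, not how Unison actually stores code. It shows two of the properties listed below: identical trees always get the same hash, and renaming only edits the name table, so code that depends on the renamed definition is untouched.

```haskell
import Data.Bits (xor)
import Data.Char (ord)
import Data.List (foldl')
import Data.Word (Word64)

-- A toy expression language (hypothetical; not Unison's real AST).
-- Other definitions are referenced by hash, never by name.
data Expr
  = Lit Int
  | Add Expr Expr
  | Ref Word64
  deriving (Show, Eq)

-- Serialize the tree; names never appear in the output.
serialize :: Expr -> String
serialize (Lit n)   = "L" ++ show n ++ ";"
serialize (Add a b) = "A(" ++ serialize a ++ serialize b ++ ")"
serialize (Ref h)   = "R" ++ show h ++ ";"

-- 64-bit FNV-1a over the serialized form: a stand-in for the
-- cryptographic hash a real system would use.
hashExpr :: Expr -> Word64
hashExpr = foldl' step 14695981039346656037 . serialize
  where
    step h c = (h `xor` fromIntegral (ord c)) * 1099511628211

-- Definitions are stored by hash; human-readable names live in a separate table.
data Codebase = Codebase
  { defs  :: [(Word64, Expr)]
  , names :: [(String, Word64)]
  } deriving Show

emptyCodebase :: Codebase
emptyCodebase = Codebase [] []

-- Adding a definition stores it under its hash and binds a name to that hash.
addDef :: String -> Expr -> Codebase -> (Word64, Codebase)
addDef name e cb =
  (h, cb { defs = (h, e) : defs cb, names = (name, h) : names cb })
  where
    h = hashExpr e

-- Renaming edits only the name table: no definition or hash changes,
-- so nothing that refers to the renamed code needs to be touched.
rename :: String -> String -> Codebase -> Codebase
rename old new cb =
  cb { names = [ (if n == old then new else n, h) | (n, h) <- names cb ] }

lookupByName :: String -> Codebase -> Maybe Expr
lookupByName n cb = lookup n (names cb) >>= \h -> lookup h (defs cb)

-- Example: "four" refers to "two" by hash, so renaming "two" leaves "four" as-is.
example :: (Word64, Word64, Codebase)
example =
  let (hTwo, cb1)  = addDef "two" (Add (Lit 1) (Lit 1)) emptyCodebase
      (hFour, cb2) = addDef "four" (Add (Ref hTwo) (Ref hTwo)) cb1
  in (hTwo, hFour, rename "two" "deux" cb2)
```

After the rename, looking up "deux" finds the original tree, "two" no longer resolves, and the stored definition of "four" (and therefore its hash) is exactly what it was before.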

This convoluted system leads to some interesting properties:

It's a functional language with no build step. The "building" happens when you add code to the codebase via the CLI, and then it never needs to be type checked or verified again. If you modify any of its dependencies, it will be automatically updated when you commit the change to the dependency.

The codebase tracks all changes just like Git. Thus, you can essentially git log -p individual functions to see how they have changed over time. This in particular is super amazing to me.

No such thing as dependency version conflicts, because there's no longer a concept of a "namespace" -- in the codebase, everything is essentially in a global namespace and uniquely identified by hash. (Though there will still be semantic conflicts where an old dependency does something differently than a new one, etc.)

Code can be trivially executed across nodes in a distributed system. A single function can bounce execution across different execution contexts (using algebraic effects). When this happens, all required code is dynamically sent to the new context and executed. This is enabled by the fact that code "requirements" are always tracked, given the hashed AST system for referencing code.

"Renaming" functions is trivial, because internally, code references other code not by variable name, but by the hash of the code's AST. You can rename something once, and then every time you "check out" the code, all variable names will automatically be updated. Renaming a variable across an entire codebase will be a single-line diff.

You automatically get incredibly powerful metaprogramming capabilities (like Lisp, but better) since your codebase is a well-typed data structure you can arbitrarily transform.

Searching for things in the codebase is... interesting. You get a lot of really powerful things, like being able to query your codebase to "give me every function with a signature [Int] -> [String]" (give me every function that takes a list of integers and returns a list of strings). But you can't search for snippets of code like you currently can in any other language, and you can't grep or anything -- all searching has to be done through their CLI. (Here's a sample of what a "codebase" looks like.) And it seems like viewing code outside of their CLI, e.g. on GitHub, is useless (since it'll just be a tree of hashes), though you can always dynamically regenerate an entire project in Unison code from the AST.

This is still all super experimental and impractical but the ideas explored here are some of the most interesting I've seen w.r.t. language design in a long time.

April

2020-04-21

Given how heavily we leverage AASM, I really should have spent the time to read the docs more carefully months ago.

When defining a state active and an event activate, AASM generates the following methods:

active? returns whether the current state is active.

activate! sends the activate event; it will error if the current state cannot transition with this event.

may_activate? returns true if activate! would succeed. I wish I had known about this one ages ago. I didn't find it because I normally use <TAB> in pry to explore available methods.

Summary:

Emphasize your practical, "boring" skills like accessibility, user testing, benchmarking, etc. over "ivory tower" ones like machine learning. Emphasize interest in product development and management over interest in hard academic topics (cryptography, ML...).

Startups are unfairly biased against enterprise languages. It can help to seek out companies with the same background (e.g. Netflix is heavily invested into Java).

Startups have a ton of variance, making it a numbers game. One prior bad hire who has a similar background as you can lead to you getting unfairly rejected.

As a recruiter, try looking at academic / enterprise programmers and teasing out whether they have (or are interested in) the "boring" skills you want.

2020-04-09

Lessons I've learned as a grad school dropout

I dropped out of grad school in December 2015 after two and a half grueling years. I left with practically nothing -- no first author publications, no degree, not even a single completed research project under my name. I hated my life so much that to me, losing a quarter of my twenties was a small price to pay for escape.

Roughly half a year later, I was starting my first day as a "real, professional software engineer." It's been almost four years since then, and I've seen some success in my new environment -- much, much more so than in academia, at least! The further I get from grad school, however, the more I'm able to objectively view my time there. In retrospect, it wasn't a waste at all: I've learned several invaluable things during my time in academia that continue to help me move forwards today.

Learning how to read

It didn't take me long to realize that for my entire life prior to grad school, I had never learned to properly read. While I don't consider myself to be particularly smart, I've always been a (comparatively) fast reader. I was skilled at identifying and extracting nuggets of important information from textbooks. This made me an efficient studier, so I did well on tests throughout school and college.

This model of learning quickly reached its limit in grad school. Research articles are so information-dense that, for the most part, every sentence is important, and simply extracting nuggets is not enough. This is quickly impressed upon you by the fact that, unlike in college, all papers have a page maximum instead of a page minimum. In addition, the sheer volume of reading that has to be done is several orders of magnitude higher than in college: if you are taking four courses, and each requires five articles per class, and each article is between 10 and 30 pages of minuscule text, you're basically consuming a textbook of highly compressed information a month. Reading is not a sprint, or a timed game of hide and seek. It is a slow, grueling, deliberate marathon. Once you are doing your own research, it's even worse: instead of having a handful of targeted articles prescribed to you, you have to trawl the entire ocean of prior research for the right articles.

When I became a junior engineer, I was stunned by the resistance many seemed to have against reading documentation. "It's too long. It takes too much time to find the information I need. I don't want to waste time going in circles." It was common to see people reaching for a more senior engineer the moment they hit a roadblock (or worse, settling for a clearly insufficient solution). Even at that time, I strongly believed that the role of a junior wasn't to be as productive as possible or to produce the cleanest work possible; it was simply to learn as much as possible, as quickly as possible. I think I read the React documentation in its entirety at least once a month in my first six months. This was enough to set me apart from my peers and even some of my seniors in terms of subject matter expertise. So much information is readily available, with the only obstacle being volume.

To me, asking a senior and getting a single, targeted answer to a specific problem when a complete guidebook is readily available is akin to choosing a single nugget of insight over an untapped gold mine. The extra minutes or hours (or days, even) I'd spend wrangling with a problem on my own terms? A small price to pay.

Learning how to measure

I'll be frank. I remember very little from the thousands of pages I've read in grad school. I don't think I could give you five hypotheses, methods, and conclusions. I hardly even remember what classes I took. One thing I do remember is the endless debates with my advisor and lab mates about what to measure to support our hypotheses. It's important to measure the right thing.

My field (industrial and organizational psychology) was somewhat unique in its application of abstract psychological principles to real organizations. Unlike in, say, neuroscience, I/O psychologists don't tend to measure psychological phenomena directly. We don't measure stress by swabbing cheeks, or attention by attaching electrodes to people's scalps, or motivation by doing an MRI. This is simply intractable at the organizational scale. Instead, we are constrained to picking good proxy variables: measurable variables that can act as sufficient substitutes for unmeasurable ones. (You could say that biological phenomena are ultimately proxy variables as well, despite there being fewer layers of indirection.) When planning a study, it was always a laborious back-and-forth to argue about which real-world outcomes mapped to which psychological principles, how this mapping could be established, and how to actually measure it.

There is a parallel in engineering management. While we can directly measure engineering results by setting up monitoring infrastructure, logging, and health checks, any attempt to do the same for productivity and motivation must use a proxy variable. Complicating the issue further is the fact that prior findings in psychological and management science often don't apply well to engineering organizations (especially young ones). The solutions for an organization often depend on the unique constraints and resources available to that organization. There is simply too much individual difference among startups. (Though, for the record, I think the higher-level the finding, the more generally applicable it is. Conclusions about motivation, stress, and burnout are more universal than conclusions about leadership, performance measurement, and organizational change.)

My paltry 2.5 years studying organizational psychology are nowhere near enough to have answers for the management challenges engineering organizations are facing. But they are more than enough to help me realize that these problems are anything but trivial, that anyone who claims to have a one-size-fits-all solution to this challenge is either misguided or has an agenda, that the effectiveness of managers is organization-dependent, and that the manager who fits your organization is worth their weight in gold. It's hard as hell to know what to measure, and the answer can be different for every organization. But by knowing how to measure, we can slowly get to the what to measure that is unique to us.

Learning how to be content

The last is the most personal. Complaining around the water cooler or breakfast area is one of the most common tropes in office culture. It was especially prevalent at my first job (and sometimes went on until it was nearly lunchtime), and I've lost many hours of my life listening to people airing out their grievances about anything and everything, from the (lack of) leadership to the dwindling office amenities to the stagnant wages to the workload. They complained about how their old colleague was living it up at that big company in the Bay, and oh, how nice it must be.

And the whole time, a single thought would resonate through my mind. FUCK OFF!!! No matter how bad my job got (and it got fairly toxic at the end), it never got anywhere near how insanely difficult life in grad school was! I loathed living with four roommates. I loathed $1.39/lb chicken thigh from Walmart. I loathed seeing my friends with jobs enjoying their early twenties, trading their cheap hobbies like basketball for expensive ones like snowboarding. I loathed being seen as some kind of noble 21st-century ascetic, forgoing a comfortable life in the pursuit of knowledge. I loathed it all, and threw my academic life in the garbage the instant I had the chance.

What did I trade it for? Certainly not for a job in a gleaming tower where they give out RSUs like candy. I don't have nap pods, and I don't have steak and sushi flown in to the office overnight cross-country for lunch. People have never heard of the companies I've worked for. But you know what? As a candidate with zero professional engineering experience, I was getting offers that were 500% of my grad student stipend. Workload? As an engineer, you're considered a workaholic if you work on weekends; in grad school, take weekends off and you die. Perks? Okay, the health insurance is less than stellar compared to what students get, but otherwise they're incomparable.

This isn't to discredit the individual struggles tech workers might face, or to say that the academic lifestyle is normal and not utterly unsustainable. However, to me it's incredibly important to maintain a sense of perspective. The five most valuable companies in the US are tech companies, and they're not shy about showing it. In the struggle to keep up with the Bezoses, it's easy to forget how easy tech workers have it overall. My wife is still in grad school and I live in a college town, so much of my friend group is still tied to academia in one way or another. I see flashes of the struggles I left behind, and it often fills me with guilt seeing how easy my life is in comparison. And it's not pity: ultimately, it's a fear of going back. What I have now, with all its flaws and day-to-day frustrations, is enough.

It's enough.

2020-04-05

Some interesting nuggets here:

Our position is different than the one many new companies find themselves in: we are not battling it out in a large, well-defined market with clear incumbents... and that means we can’t limit ourselves to tweaking the product; we need to tweak the market too.

What we are selling is not the software product — the set of all the features, in their specific implementation — because there are just not many buyers for this software product...

However, if we are selling “a reduction in the cost of communication” or “zero effort knowledge management” or “making better decisions, faster” or “all your team communication, instantly searchable, available wherever you go” or “75% less email” or some other valuable result of adopting Slack, we will find many more buyers. That’s why what we’re selling is organizational transformation. The software just happens to be the part we’re able to build & ship (and the means for us to get our cut).

The reason for saying we need to do ‘an exceptional, near-perfect job of execution’ is this: When you want something really bad, you will put up with a lot of flaws. But if you do not yet know you want something, your tolerance will be much lower. That’s why it is especially important for us to build a beautiful, elegant and considerate piece of software. Every bit of grace, refinement, and thoughtfulness on our part will pull people along. Every petty irritation will stop them and give the impression that it is not worth it. That means we have to find all those petty irritations, and quash them. We need to look at our own work from the perspective of a new potential customer and actually see what’s there. Does it make sense? Can you predict what’s going to happen when you click that button or open that menu? Is there sufficient feedback to know if the click or tap worked? Is it fast enough? If I read the email on my phone and click the link, is it broken?.. It is always harder to do this with one’s own product: we skip over the bad parts knowing that we plan to fix it later. We already know the model we’re using and the terms we use to describe it. It is very difficult to approach Slack with beginner’s mind. But we have to, all of us, and we have to do it every day, over and over and polish every rough edge off until this product is as smooth as lacquered mahogany.

March

2020-03-25

Engineering empowerment

My current company is quite unique compared to previous places I've worked, in that every engineer essentially has carte blanche with regards to how they prioritize their work. This is actually quite a lot more stressful than I imagined it would be, because the onus is on you to make sure you are prioritizing the right thing. In the past, I've always had a project manager steering the ship.

When your peers and your managers expect that you are talented enough to make the right call, you will second guess yourself a lot. Context switching is quite exhausting for me, and I prefer to immerse myself in one project and get it to the magical point where it is "done." However, when there are five or six balls you have to juggle at once, you're always afraid of blocking someone else, or leaving a customer unhappy.

On the flip side, this is a strong indication that the leaders trust the individual contributors enough to give them this degree of responsibility. It's a learning experience, but I feel incredibly lucky to be working where I am now! I recently had a conversation with our CTO that really stuck with me. He said three things, one after the other:

This is probably one of the most highly efficient engineering teams I've worked with in my career.

I think we're operating at... maybe 50% of our possible efficiency.

We can get higher without working longer hours and just working better as a team, I think.

Three bombshells, one right after the other. I was struck by how grand and compelling this vision was, and how deep his trust in the team went.

One sub-topic I find interesting is the prioritization of customer requests over foundational work. Customer requests are additive and short term: each completed request improves the relationship with a single customer (and each dropped ball hurts it). On the other hand, foundational work is multiplicative: a strong foundation improves the product for everyone; however, if individual customer requests are dropped while working on foundation, the multiplicand decreases despite the multiplier increasing, possibly resulting in a net loss of value. The gold standard is to find a way to architect customer requests such that they become foundational work, and improve the platform for everyone in a way that ideally cuts down requests in this domain from other customers.

Vim as a code review tool

I'm playing with using my editor as my primary code review tool.

The primary advantage is context. By viewing the diff in your editor, you can easily jump to any definition or type whose meaning isn't immediately clear from the diff. I find that when reviewing code in the browser, I often have to follow along in my editor anyways for full understanding.

Secondly, there's speed. I've used quite a few code review tools in the past, and GitLab and Bitbucket stand out to me as handling large diffs extraordinarily poorly. In your editor, you can hop between files and tabs instantaneously. Directory-wide searching is trivial as well.

Finally, testing ideas is incredibly easy. I often want to double-check the accuracy of a comment I'm leaving, which means opening my editor, navigating to the file I'm currently viewing in the browser, making my change, and testing it. When your editor is your code review tool, you can make changes the instant they come to mind, with very little friction.

The biggest source of friction I've been running into is leaving comments. Right now I've just been leaving them inline in code, and then doing a diff when complete and copying the comments into the browser. As you can imagine, this is quite annoying.

A more minor grievance is discovery. While GitHub has a rich command-line ecosystem, it's not immediately obvious how I would query and navigate a list of all open PRs on Bitbucket, which is our current code review tool. Currently, I still have to use the browser to find the PR I am reviewing before checking it out.

2020-03-24

I strongly agree with this, and am glad that I'm getting some validation about pushing to enforce this on teams I've been on previously.

I've been using CSS grid even for single-column, one-dimensional layouts, because grid-gap is such a great abstraction and results in incredibly standardized layouts. And it's incredibly easy to add responsiveness if needed: just tweak the column count based on the page size!

This is a great motivator for me to step up my technical communication skills, though. This article is succinct, yet persuasive and informative.

2020-03-21

Practical explanation of folds

People joke about the monad curse, in that once you understand monads, you lose the ability to explain them in a sensible way. Sometimes this "curse" is genericized to cover any nontrivial functional programming concept.

lexi-lambda seems to be an exception to this rule. Here's a great explanation nested in a code review about when to use each type of fold, with concrete examples: foldl vs. foldr illustrated
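As a rough companion (a sketch of the usual textbook distinctions, not taken from the linked explanation; the function names are hypothetical): foldr associates to the right and hands the combining function the rest of the fold lazily, so it can short-circuit and build lists in order; foldl' from Data.List associates to the left and forces the accumulator at each step, which suits collapsing a list into a single strict value.

```haskell
import Data.List (foldl')

-- foldr associates to the right: foldr f z [a, b, c] = f a (f b (f c z)).
-- f receives "the rest of the fold" lazily, so (||) can stop early,
-- which is why this works even on an infinite list that contains an even number.
anyEven :: [Int] -> Bool
anyEven = foldr (\x rest -> even x || rest) False

-- foldr is the natural fit for building a new list in the original order,
-- and because (:) is lazy in its tail, it also works on infinite input.
doubleAll :: [Int] -> [Int]
doubleAll = foldr (\x acc -> 2 * x : acc) []

-- foldl' associates to the left and forces the accumulator at every step,
-- so summing a long list does not build up a chain of unevaluated thunks.
sumStrict :: [Int] -> Int
sumStrict = foldl' (+) 0

-- Left-to-right order also matters when building a number from its digits:
-- ((0 * 10 + 1) * 10 + 2) * 10 + 3 = 123.
fromDigits :: [Int] -> Int
fromDigits = foldl' (\acc d -> acc * 10 + d) 0
```

The plain (lazy) foldl is left out on purpose: for a strict operation like (+) it only adds thunks compared to foldl'.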

2020-03-11

Micro brands revisited

Why all the Warby Parker clones are now imploding

As more brands arise, customer acquisition gets more expensive due to increased advertising costs. Very interesting analogy of "CAC is the new rent".

Digital advertising is less mature (or maybe less scalable) than anyone thought? As these companies scale up, they find that physical billboards in high-traffic locations are more effective than digital advertising. Similarly, brands that sought to bypass being physically stocked are now fighting for those very spots.

Associated with the above, overly aggressive emphasis on growth due to heavy courting of VCs.

This conclusion somewhat parallels findings from the gig economy:

Tech investments do allow you to cut costs and be more nimble... but seemingly only up to a certain scale.

Part of the reason costs are lower in the first place is simply that the digital space is undersaturated.

Another interesting case study of Casper as a rare public micro brand follows. I won't summarize it here, but worth a read.

2020-03-08

Vim dispatch

(Seedlings of a future reference page.)

Useful dispatch recipes:

Caveats:

Does not work for tty-needing commands, e.g. tig, git commit...

Does not work with aliases

Note that vim-fugitive already handles pull, push, etc. asynchronously.

January

2020-01-20

Haskell's regexp library

...is probably the most expressive I've ever seen.

There are several interesting things to unpack here. Right off the bat, we have a function with a polymorphic return type in a strongly, statically typed language. That alone is notable. How is this possible?

Let's take a closer look. When inspecting the type of =~ with :t (=~) in GHCi, we get:

It turns out the return type, target, is constrained by the RegexContext type class (alongside the Regex type used for the compiled pattern). Without diving deeper into the implementation of these types, we can tell that RegexContext is the source of this magic polymorphism. Specifically, it is implemented with type class instances rather than runtime checks: several instances are declared, each producing a different kind of result depending on the type of target, which is the last type parameter of RegexContext. In regular usage, if the expected type of target is clear from context, no type annotation is needed: the type system just "does the right thing."

In an object-oriented language, RegexContext might be implemented as a class with instance methods like .asBool(), .asTuple(), etc. However, the consumer is still responsible for calling the correct method, whereas here the return-type logic lives entirely at the type level: the compiler picks the right instance from the expected type, with no dispatch code on the caller's side. This results in a beautifully elegant developer experience.
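The real (=~) lives in the regex packages (for example Text.Regex.Posix from regex-posix, or Text.Regex.TDFA), so it can't be shown without extra dependencies. The sketch below imitates the same mechanism using only base: a hypothetical MatchResult class plays the role of RegexContext, a hypothetical (=~?) operator plays the role of (=~), and plain substring search stands in for a regex engine. Only the caller's expected result type decides which instance runs.

```haskell
{-# LANGUAGE FlexibleInstances #-}

import Data.List (isPrefixOf, tails)

-- Hypothetical stand-in for RegexContext: the *result type* selects the instance.
-- Arguments: the offsets where the pattern matched, and the pattern itself.
class MatchResult r where
  fromMatches :: [Int] -> String -> r

-- Did anything match?
instance MatchResult Bool where
  fromMatches offsets _ = not (null offsets)

-- How many matches were there?
instance MatchResult Int where
  fromMatches offsets _ = length offsets

-- Where did each match start?
instance MatchResult [Int] where
  fromMatches offsets _ = offsets

-- What was the first matched text ("" if there was none)?
instance MatchResult String where
  fromMatches [] _          = ""
  fromMatches _ matchedText = matchedText

-- Hypothetical operator mirroring (=~); substring search stands in for a regex engine.
(=~?) :: MatchResult r => String -> String -> r
haystack =~? needle = fromMatches offsets needle
  where
    offsets = [i | (i, rest) <- zip [0 ..] (tails haystack), needle `isPrefixOf` rest]
```

For instance, "banana" =~? "an" evaluates to True, 2, or [1, 3] depending on whether the context expects a Bool, an Int, or an [Int]. With the real library, ("banana" =~ "an" :: Bool) is chosen the same way.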

2020-01-16

A somewhat elegant way to ensure list nonemptiness

When working with lists of indeterminate length, it often makes sense to treat the list as being of type Maybe [t], with the empty list playing the role of Nothing.

Thus, the following idiom can be used to concisely ensure nonemptiness without using Data.List.NonEmpty or a related type.
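A minimal sketch of such an idiom using only base (the helper names are hypothetical): listToMaybe is Nothing exactly when the list is empty, so xs <$ listToMaybe xs yields Nothing for [] and Just xs otherwise. mfilter gives an equivalent spelling.

```haskell
import Control.Monad (mfilter)
import Data.Maybe (listToMaybe)

-- Nothing for [], Just xs otherwise. listToMaybe is Nothing exactly when the
-- list is empty, and (<$) swaps the head it found for the whole list.
nonEmptyOrNothing :: [a] -> Maybe [a]
nonEmptyOrNothing xs = xs <$ listToMaybe xs

-- The same thing spelled with mfilter.
nonEmptyOrNothing' :: [a] -> Maybe [a]
nonEmptyOrNothing' = mfilter (not . null) . Just

-- Hypothetical usage: an empty result list is handled like a missing value.
describe :: [String] -> String
describe results =
  maybe "no results" (\rs -> show (length rs) ++ " result(s)") (nonEmptyOrNothing results)
```

Unlike Data.List.NonEmpty, this does not record nonemptiness in the type of the list itself; it only forces callers to handle the empty case through Maybe.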

2020-01-15

2020-01-04

Slightly modified and added to my dotfiles here, though it's not really a dotfile. TODO: Find a better solution for including self-written scripts and applications for new machine setup.

Source: (AppleScript really is the ugliest language ever.)

2020-01-03

Bolster tests while refactoring

Today I ran into an idea that's new to me but apparently common in the Haskell community. When refactoring, the old implementation is kept around in its entirety and acts as a test model for the new implementation for as long as necessary. This is slightly more robust than just keeping around a list of fixtures for specs.

This is partially enabled by the Haskell test ecosystem having good DX around autogenerating primitive fixtures and running a barrage of them against your code, but that's a tractable problem in other languages.
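A small sketch of the pattern, with hypothetical names: encodeOld is the pre-refactor run-length encoder kept verbatim, encodeNew is its rewrite, and the old version serves as the model. Instead of QuickCheck's random inputs, this version exhaustively enumerates every string up to a fixed length over a tiny alphabet (the SmallCheck-style approach), which keeps it dependency-free.

```haskell
import Control.Monad (replicateM)
import Data.List (group)

-- The old implementation, kept around verbatim as the model.
-- (group never produces an empty group, so the pattern always matches.)
encodeOld :: String -> [(Char, Int)]
encodeOld s = [(c, 1 + length cs) | (c : cs) <- group s]

-- The refactored implementation under test: explicit recursion, no group.
encodeNew :: String -> [(Char, Int)]
encodeNew [] = []
encodeNew (c : cs) = go c 1 cs
  where
    go cur n [] = [(cur, n)]
    go cur n (x : xs)
      | x == cur  = go cur (n + 1) xs
      | otherwise = (cur, n) : go x 1 xs

-- Every string over the alphabet with length 0 through n.
allStrings :: String -> Int -> [String]
allStrings alphabet n = concatMap (\k -> replicateM k alphabet) [0 .. n]

-- Inputs where the new code disagrees with the old model (should be empty).
counterexamples :: [String]
counterexamples = [s | s <- allStrings "ab" 8, encodeNew s /= encodeOld s]
```

With QuickCheck, the same check is typically written as a property such as prop_refactor s = encodeNew s == encodeOld s and run with quickCheck.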

2020-01-02

Stripe's ecosystem

As a user, I find Stripe's accounts ecosystem a thing of beauty. Pay for something with Stripe once, and if you visit the checkout page of another vendor that uses Stripe, you automatically receive a verification code that imports your payment details. Shopify's system is somewhat similar, but Stripe's implementation is so low-friction it actually brought a tear to my eye. I will definitely be drawing inspiration from it for work.

2020-01-01

After months of procrastination, I've finally gotten this to a feature-complete v1 stage. It's a fairly simple Haskell program that analyzes your shell history to help you identify areas you can optimize with aliases.
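For a rough idea of what such an analysis involves, here is a hypothetical sketch (not the program itself): it counts identical command lines and ranks them by an assumed heuristic, the characters saved per use if the command became a two-character alias, times the number of uses. It assumes plain history lines without timestamps; zsh's extended history format, for example, would need parsing first.

```haskell
import Data.Char (isSpace)
import Data.List (dropWhileEnd, group, sort, sortOn)
import Data.Ord (Down (..))

-- Count how often each distinct command line appears.
frequencies :: [String] -> [(String, Int)]
frequencies ls = [(c, 1 + length rest) | (c : rest) <- group (sort ls)]

-- Assumed heuristic: characters saved per use if the command became a
-- 2-character alias, multiplied by how often it was run.
savings :: (String, Int) -> Int
savings (cmd, n) = max 0 (length cmd - 2) * n

-- The k commands with the largest estimated savings, after trimming
-- surrounding whitespace and dropping blank lines.
aliasCandidates :: Int -> [String] -> [(String, Int)]
aliasCandidates k history =
  take k
    . sortOn (Down . snd)
    . map (\entry -> (fst entry, savings entry))
    . frequencies
    . filter (not . null)
    $ map trim history
  where
    trim = dropWhileEnd isSpace . dropWhile isSpace
```

In practice the input would come from something like lines <$> readFile on the history file; counting whole command lines is the simplest choice, and a real tool would likely also look at common prefixes.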
